By 2025, artificial intelligence (AI) has significantly transformed the landscape of digital and physical fakes. As the technology advances at an unprecedented pace, AI-powered tools have made fakes easier to create and, at the same time, harder to tell apart from the real thing.

## I. Image and Video Forgery

### A. Deepfake Technology Advancements

By 2025, deepfake technology has advanced considerably. AI models can now generate hyper-realistic images and videos with little effort. They learn from large amounts of real-world data, such as facial expressions, body language, and speech patterns. For example, a deepfake video can make it appear that a well-known public figure said something they never actually said. The AI system first studies hours of the person's existing footage, learning their facial features, how their mouth moves when they speak, and even their idiosyncratic gestures. It can then synthesize a new video from a different script, so that the public figure appears to deliver a completely fabricated message.

### B. Implications for Media and Entertainment

This has far-reaching implications for the media and entertainment industries. In the media, fabricated news becomes easier to produce: a deepfake video of a political leader making controversial statements can spread quickly on social media platforms, causing public panic and spreading misinformation. In entertainment, deepfakes can serve creative purposes, such as replacing an actor in certain scenes without reshoots, but they also raise ethical questions. For instance, an actor's likeness could be used in a different project without their consent, raising unresolved questions about privacy and intellectual property rights.

## II. Audio Forgery

### A. Voice Synthesis Evolution

AI-driven voice synthesis has also advanced considerably by 2025. It is now possible to clone almost anyone's voice: from a relatively small sample of a person's speech, an AI system can generate a synthetic voice that is very difficult to distinguish from the original. The technology has legitimate applications, but it also has a dark side. Criminals, for example, could use voice clones to make fraudulent phone calls, posing as a friend or family member of a bank customer to request sensitive information such as account details or passwords.

### B. Impact on Security and Privacy

The rise of audio forgery poses a significant threat to security and privacy. In corporate settings, a fake voice could be used to manipulate business decisions; for example, a cloned voice of an executive could instruct employees to transfer large sums of money to a fraudulent account. In personal communication, the authenticity of voice messages also becomes suspect: people can no longer be sure that the voice they hear on the phone belongs to the person they think it does.

## III. Document Forgery

### A. AI-Assisted Text Generation

AI-powered text generation has enabled more sophisticated document forgeries. In 2025, AI systems can generate text that is almost indistinguishable from human writing, because they are trained on a wide range of texts spanning different writing styles, genres, and languages. A forged contract, for example, could be produced with AI: the system could mimic the language and formatting of a genuine contract, making the forgery difficult for an untrained eye to detect.

### B. Challenges in Authentication

Authenticating documents has become a major challenge. Traditional methods such as signature verification are no longer sufficient, because AI can replicate handwritten signatures with a high degree of accuracy. New authentication technologies are therefore needed. Biometric methods, such as fingerprint or iris recognition for digital documents, are being explored, but they have their own limitations and vulnerabilities.

## IV. Countermeasures Against AI-Driven Fakes

### A. Detection Technologies

In response to the growing threat of AI-created fakes, detection technologies have also evolved. For deepfake images and videos, researchers have developed algorithms that look for subtle inconsistencies in facial features, lighting, and motion, often by comparing the characteristics of the suspect media against a database of known authentic images and videos. Similarly, audio forensics techniques analyze characteristics of natural speech, such as micro-tremors and pitch variations, that are difficult for a synthetic voice to replicate exactly.
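To make "pitch variations" concrete, here is a minimal sketch of local jitter, a standard voice-quality measure: the average cycle-to-cycle change in pitch period divided by the average period. It assumes the pitch periods have already been extracted by a pitch tracker (not shown), and the `SUSPICIOUSLY_STABLE` threshold is a hypothetical value chosen for illustration. A real detector would combine many learned features, and newer synthesizers may add artificial variation, so low jitter on its own is weak evidence.

```python
from statistics import mean


def local_jitter(periods_ms: list[float]) -> float:
    """Mean absolute difference between consecutive pitch periods,
    divided by the mean pitch period."""
    if len(periods_ms) < 2:
        raise ValueError("need at least two pitch periods")
    diffs = [abs(a - b) for a, b in zip(periods_ms, periods_ms[1:])]
    return mean(diffs) / mean(periods_ms)


# Hypothetical threshold: real detectors learn decision boundaries from data.
SUSPICIOUSLY_STABLE = 0.002


def looks_too_stable(periods_ms: list[float]) -> bool:
    """Flag voices whose cycle-to-cycle variation is implausibly low."""
    return local_jitter(periods_ms) < SUSPICIOUSLY_STABLE


natural = [8.0, 8.1, 7.9, 8.2, 8.0, 7.8]   # periods in milliseconds
synthetic = [8.0, 8.0, 8.0, 8.0, 8.0, 8.0]  # perfectly regular, unlike speech
print(round(local_jitter(natural), 4), looks_too_stable(natural))
print(local_jitter(synthetic), looks_too_stable(synthetic))
```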

### B. Legal and Regulatory Frameworks

Governments around the world are also introducing legal and regulatory frameworks. Laws are being proposed to make the creation and dissemination of certain types of fakes illegal; for example, some proposals would criminalize creating and spreading deepfake videos that could harm public figures or incite violence. Implementing such laws is not straightforward, however. Defining what constitutes a harmful fake and determining appropriate penalties are complex issues that require careful consideration.

### C. Public Awareness and Education

Another important aspect of combating AI-created fakes is public awareness and education. In 2025, campaigns are being launched to inform the public about the existence and potential dangers of AI-generated fakes. People are being taught to be more critical of the media they consume, for example by looking for signs of manipulation in videos or by being cautious of unsolicited voice-based requests for personal information.

## V. Common Problems and Solutions

### Problem 1: Difficulty in Identifying Deepfake Videos

- **Solution**: Keep deepfake detection software up to date. Detection models should be retrained continuously on new and diverse deepfake examples as well as authentic media. Users should also be encouraged to look for telltale signs such as inconsistent facial expressions, unnatural eye movements, or lighting that does not match the surroundings in a video. For example, if a person's eye movements seem out of step with their facial expressions, the video may be a deepfake.
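One of the signs listed above, unnatural eye movement, is often checked through blinking. The sketch below counts blinks in a per-frame eye-aspect-ratio (EAR) series and flags clips whose blink rate falls outside a plausible range. It assumes the EAR values were already computed by a facial-landmark detector (not shown). The threshold, the minimum run length, and the `PLAUSIBLE_BPM` range are hypothetical values. Newer generators may reproduce natural blinking, so treat this as one weak signal among many rather than a verdict.

```python
# Hypothetical plausibility range in blinks per minute; real rates vary by person and task.
PLAUSIBLE_BPM = (8.0, 30.0)


def count_blinks(ear_series: list[float], threshold: float = 0.21, min_frames: int = 2) -> int:
    """Count runs of at least `min_frames` consecutive frames with EAR below
    `threshold` (eye closed). Both defaults are illustrative assumptions."""
    blinks = 0
    run = 0
    for ear in ear_series:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # the clip ended while the eye was still closed
        blinks += 1
    return blinks


def blinks_per_minute(ear_series: list[float], fps: float) -> float:
    if not ear_series:
        raise ValueError("empty EAR series")
    minutes = len(ear_series) / fps / 60
    return count_blinks(ear_series) / minutes


def blink_rate_is_unusual(ear_series: list[float], fps: float) -> bool:
    low, high = PLAUSIBLE_BPM
    return not (low <= blinks_per_minute(ear_series, fps) <= high)


# A 60-second clip at 30 fps in which the subject never blinks.
frames = [0.30] * 1800
print(blinks_per_minute(frames, fps=30), blink_rate_is_unusual(frames, fps=30))
```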

### Problem 2: False Alarms in Audio Forgery Detection

- **Solution**: Develop more advanced algorithms that can distinguish natural variation in a person's voice from actual forgery. One way to achieve this is to collect larger and more diverse datasets of natural voice variation, such as changes caused by emotional state, health, or the recording environment. In addition, require multi-factor authentication for voice-based transactions: alongside voice recognition, use another factor such as a one-time password.
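The one-time-password factor can be sketched with time-based one-time passwords (TOTP, RFC 6238) using only Python's standard library. This assumes the bank and customer share a secret provisioned at enrollment. `verify_voice_transaction` and its `voice_match` argument are a hypothetical wrapper standing in for whatever voice-recognition result the system produces. Production systems should use a vetted library and usually accept codes from adjacent time steps to tolerate clock drift.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238) with HMAC-SHA1."""
    now = time.time() if at is None else at
    counter = int(now // step)  # number of time steps since the Unix epoch
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation (RFC 4226)
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def verify_voice_transaction(voice_match: bool, submitted_code: str,
                             secret: bytes, at: Optional[float] = None) -> bool:
    """Hypothetical check: approve only if BOTH the voice check and the OTP pass."""
    expected = totp(secret, at)
    return voice_match and hmac.compare_digest(submitted_code, expected)
```

Because both checks must pass, a convincing voice clone alone is not enough to authorize the transaction.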

### Problem 3: Inability to Keep Up with New AI-Driven Forgery Techniques

- **Solution**: Foster a community of researchers and security experts who share information about new AI-driven forgery techniques as soon as they are discovered, through international conferences, online forums, and research collaborations. Governments and private companies should also invest in research and development to stay ahead of the curve; for example, they can fund projects that aim to anticipate future forgery techniques based on current trends in AI development.

### Problem 4: Lack of Standardization in Document Authentication

- **Solution**: Governments and international organizations should work together to establish standard authentication protocols for digital and physical documents. These standards should include requirements for the use of advanced encryption techniques, biometric authentication, and digital watermarking. For example, all official government documents could be required to have a unique digital watermark that can be easily verified using a standardized verification tool.
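The verification step can be sketched with a keyed cryptographic tag (HMAC-SHA256) computed over the document's bytes. This is a swapped-in technique. It is not a watermark embedded in the content. Also, because verification requires the issuer's secret key, a public standardized verification tool would more likely rely on public-key digital signatures. The key and certificate text below are hypothetical.

```python
import hashlib
import hmac


def issue_tag(document: bytes, issuer_key: bytes) -> str:
    """Compute a tag the issuing authority attaches to (or registers for) a document."""
    return hmac.new(issuer_key, document, hashlib.sha256).hexdigest()


def verify_document(document: bytes, tag: str, issuer_key: bytes) -> bool:
    """Recompute the tag and compare in constant time; any edit to the bytes fails."""
    expected = issue_tag(document, issuer_key)
    return hmac.compare_digest(expected, tag)


key = b"hypothetical-issuer-key"
original = b"Certificate No. 0001: issued to A. Example"
tag = issue_tag(original, key)
print(verify_document(original, tag, key))                              # untouched document
print(verify_document(original.replace(b"A.", b"B."), tag, key))        # one name changed
```

Changing even a single character of the document, or verifying with a different key, causes the check to fail.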

### Problem 5: Public Resistance to New Authentication Methods

- **Solution**: Conduct comprehensive public awareness campaigns to explain why new authentication methods matter. These campaigns should not only describe the security benefits of these methods but also address concerns about privacy or inconvenience. For example, if a new biometric authentication method is being introduced, the campaign could emphasize that the biometric data is stored securely and used only for authentication.

In conclusion, the role of artificial intelligence in creating fakes in 2025 is a complex, multifaceted issue. AI has enabled highly sophisticated fakes, but it has also spurred the development of countermeasures. Through a combination of detection technologies, legal regulation, and public education, society is working to mitigate the risks of AI-driven fakes. The battle against fakes is ongoing, however, and continuous effort is required to stay one step ahead of forgers.

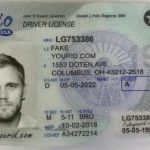

Fake ID Pricing

unit price: $109

| Order Quantity | Price Per Card |

|---|---|

| 2-3 | $89 |

| 4-9 | $69 |

| 10+ | $66 |